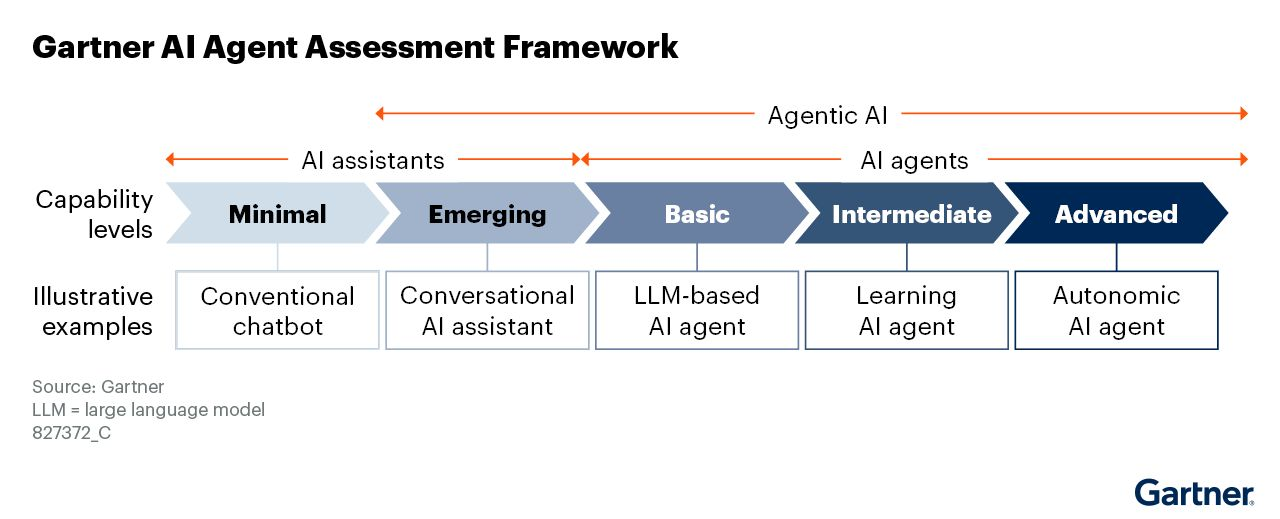

Amid today’s boom in artificial-intelligence solutions, it’s increasingly hard to compare products that parade under similar labels—“assistant,” “bot,” “agent,” “copilot”—yet carry hugely different behaviors, costs, and risks. To bring order to this confusion, Gartner created the AI Agent Assessment Framework, a model that maps the functional evolution of conversational systems across five capability levels: Minimal, Emerging, Basic, Intermediate, and Advanced. The framework not only standardizes vocabulary; it also helps business and technology leaders align expectations, prioritize investments, and craft a realistic adoption roadmap. Below, we examine each level, highlight the technical requirements, and discuss how organizations can move safely from one stage to the next.

1. Minimal — Conventional Chatbots

The Minimal tier represents first-generation, rules-based or decision-tree bots. They recognize keywords, fire off scripted responses, and—at best—escalate the user to a human when they hit a wall. Their value lies in closed Q&A loops: checking an account balance, supplying opening hours, issuing a payment slip. Lacking natural-language understanding (NLU) or any learning capability, they demand constant manual upkeep. Even so, they’re useful when the aim is to deflect high-volume, low-risk inquiries at scale.
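To make the Minimal tier concrete, here is a small sketch of a keyword-driven bot with a human-escalation fallback. The rules, reply texts, and the `minimal_bot` name are hypothetical and chosen only for illustration; they do not come from the framework or from any product.

```python
# Hypothetical rules: keyword -> scripted reply (illustrative only).
RULES = {
    "balance": "Your balance is available under Accounts > Overview.",
    "hours": "We are open Monday to Friday, 9:00 to 17:00.",
    "payment slip": "A payment slip has been sent to your registered email.",
}

ESCALATION = "I could not find an answer. Transferring you to a human agent."


def minimal_bot(message: str) -> str:
    """Return the first scripted reply whose keyword appears in the message."""
    text = message.lower()
    for keyword, reply in RULES.items():
        if keyword in text:
            return reply
    # No keyword matched: the bot has hit a wall and escalates.
    return ESCALATION
```

A paraphrase such as "When do you close?" contains none of the keywords, so the bot escalates even though the question is about opening hours. Every such gap has to be closed by hand with another rule, which is the manual upkeep described above.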

2. Emerging — Conversational AI Assistants

At the Emerging stage, we encounter conversational AI assistants. These systems embed NLU engines that detect intents, extract entities, and handle more linguistic variation. They also maintain session context, enabling slightly more fluid dialogues. Yet they remain reactive: they respond only when prompted, can’t plan complex actions, and don’t expand their knowledge without human oversight. This tier suits organizations that need to break free from rigid scripted bots but still require tight governance over answers and compliance.
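The difference from a scripted bot can be sketched as follows. This is a toy stand-in, not a real NLU engine: intent detection is approximated with word overlap, the entity is a five-digit order number matched by a regular expression, and the intent names, vocabulary, and `Session` class are all assumptions made for the example.

```python
import re
from dataclasses import dataclass, field

# Hypothetical intents and vocabulary; a production assistant would use a
# trained NLU model instead of word overlap.
INTENT_VOCAB = {
    "order_status": {"where", "status", "track", "arrive", "delivery"},
    "cancel_order": {"cancel", "stop", "refund"},
}
ORDER_ID = re.compile(r"\b\d{5}\b")  # assumed format: five-digit order numbers


@dataclass
class Session:
    """Per-conversation context that survives between turns."""
    slots: dict = field(default_factory=dict)


def detect_intent(text: str) -> str:
    """Pick the intent whose vocabulary overlaps most with the message."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {name: len(words & vocab) for name, vocab in INTENT_VOCAB.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "fallback"


def handle_turn(session: Session, text: str) -> str:
    """Resolve intent and entity, reusing entities from earlier turns."""
    intent = detect_intent(text)
    match = ORDER_ID.search(text)
    if match:
        session.slots["order_id"] = match.group()  # remember the entity
    order_id = session.slots.get("order_id")
    if intent == "fallback":
        return "Sorry, I did not understand that."
    if order_id is None:
        return "Which order do you mean?"
    return f"{intent} for order {order_id}"
```

In a two-turn exchange, "Can you track order 12345?" followed by "Actually, please cancel it" resolves the second request against order 12345 because the session remembers the entity. The assistant is still reactive: it only does something when a message arrives.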

3. Basic — LLM-Based AI Agents

The Basic label marks the arrival of true AI agents, powered by large language models (LLMs). The system can reason over open instructions, draft novel text, compose emails, or summarize documents. Instead of selecting canned replies, it generates responses in real time, boosting personalization. Memory, however, is still volatile—tied to the LLM’s context window—and the agent doesn’t yet teach itself new processes. Basic agents shine in creative use cases such as detailed tech support or marketing content generation, provided prompt-engineering controls and human validation are in place to curb hallucinations.
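Why memory is volatile at this level can be illustrated with a short sketch of history trimming. It assumes the agent keeps only the most recent messages that fit a fixed token budget, and it counts whitespace-separated words as a crude substitute for a real tokenizer; the `fit_to_window` function is hypothetical and not part of any LLM library.

```python
def fit_to_window(history: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(history):
        cost = len(message.split())  # crude stand-in for a real tokenizer
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

With a budget of 12 words, a history of "my name is Ana", "I use the Pro plan", and "how do I export a report" loses its first message. Anything that falls outside the window, here the user's name, is no longer visible to the model on the next turn.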

4. Intermediate — Learning AI Agents

At Intermediate, the agent gains learning layers: implicit user feedback, reinforcement-learning adjustments, or continual fine-tuning on domain data. A long-term memory (vector stores, knowledge graphs, or relational databases) allows it to recall past interactions and adapt to new patterns. In practice, it begins to suggest routines, prioritize requests by history, and orchestrate API calls autonomously. The leap requires robust infrastructure (data pipelines, observability, continuous A/B testing) and strict privacy policies, because the system now retains sensitive information. Productivity gains can be substantial at this level, but so is the challenge of governing a self-modifying system.
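The long-term memory idea can be sketched with a tiny in-process store. This is a simplification under stated assumptions: the "embedding" is a plain word count rather than a learned vector, similarity is cosine similarity, and the `LongTermMemory` class is a hypothetical name, not the API of any vector database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LongTermMemory:
    """Stores past interactions and recalls the most similar ones."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str]] = []

    def remember(self, text: str) -> None:
        self._items.append((embed(text), text))

    def recall(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]
```

Unlike a context window, this store persists across sessions, which is also why the privacy concern appears at this level: whatever is passed to `remember` stays retrievable until it is explicitly deleted.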

5. Advanced — Autonomic AI Agents

The pinnacle is Advanced, occupied by autonomic agents. Inspired by autonomic IT systems, these agents set goals, break them into tasks, select external tools (databases, microservices, even other agents), and monitor outcomes so they can self-heal and self-optimize. They operate over days or weeks, planning and executing, say, an end-to-end email campaign while adjusting tone in response to engagement metrics. Achieving such autonomy demands multi-layer verification architectures (including explainable reasoning traces), granular permission systems, and explicit domain boundaries to prevent conflicts with business or ethical rules. Few organizations are ready for this stage, but it signals the long-term destination of Agentic AI.
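One way to picture granular permissions and explicit domain boundaries is a gateway that checks every tool call before it runs. The sketch below is an assumption about how such a control could look, not a description of any specific agent platform; `Tool`, `ToolGateway`, and the domain labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


class PermissionDenied(Exception):
    """Raised when an agent requests a tool outside its granted scope."""


@dataclass(frozen=True)
class Tool:
    name: str
    domain: str  # hypothetical labels such as "marketing" or "billing"
    run: Callable[[str], str]


class ToolGateway:
    """Checks every tool call against an allowlist before executing it."""

    def __init__(self, tools: list[Tool], allowed_domains: set[str]) -> None:
        self._tools = {t.name: t for t in tools}
        self._allowed = allowed_domains
        self.audit_log: list[tuple[str, str]] = []  # (tool name, outcome)

    def call(self, name: str, argument: str) -> str:
        tool = self._tools.get(name)
        # Unknown tools and tools outside the allowed domains are both refused.
        if tool is None or tool.domain not in self._allowed:
            self.audit_log.append((name, "denied"))
            raise PermissionDenied(name)
        self.audit_log.append((name, "allowed"))
        return tool.run(argument)
```

An agent running an email campaign would be given a gateway restricted to the "marketing" domain, so a call to a billing tool fails and leaves a trace in the audit log. Denying by default and logging every decision keeps the agent's autonomy inside boundaries that humans can review.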

Assistants vs. Agents—Why the Distinction Matters

In Gartner’s diagram, one arrow spans the first two levels and labels them AI assistants, while a longer arrow covers Basic through Advanced under AI agents. The dividing line is autonomy: assistants boost human productivity but never act on their own; agents pursue goals with minimal supervision. Blurring the distinction breeds frustration—business teams expect a bot to “solve problems” solo when it was only built to answer FAQs. Conversely, giving an autonomous agent a regulatory workflow without safeguards risks serious violations. Naming each solution accurately is an act of governance.

How to Progress from One Level to the Next

The framework is more than diagnostic; it’s a roadmap. Organizations anchored in Minimal should prioritize intent-data collection and migration to NLU, preparing the leap to Emerging. Those operating in Basic must invest in feedback pipelines, model versioning, and observability to reach Intermediate. The final step to Advanced requires integration with transactional systems, ethical guardrails, and zero-trust policies for API calls. Across all phases, success should be measured by business-aligned KPIs, from cost-per-ticket reduction to incremental revenue generated by the agent.

Strategic and Governance Implications

Adopting the Gartner framework eases conversations between technical and executive teams by translating algorithmic jargon into a small set of capability stages. For the CIO, it signals the level of infrastructure investment required. For the CISO, it highlights data risks and the need for interpretable audits. For the CHRO, it forecasts shifts in employee experience as repetitive tasks migrate to agents. Product and marketing teams gain clarity on when to layer in new capabilities without overloading the roadmap.

Key Takeaways

The journey to Agentic AI is incremental, not an instant leap to a “do-everything bot.” By classifying systems as Minimal, Emerging, Basic, Intermediate, or Advanced, the Gartner AI Agent Assessment Framework gives every organization a starting point to pinpoint its current position, identify gaps, and prioritize initiatives. Ultimately, the goal is to empower people and processes with machines that evolve from mere responders to autonomous partners in value creation. Those who balance technological ambition with disciplined governance will be best placed to benefit from this new era of intelligent agents.